OpenAI’s artificial intelligence chatbot ChatGPT has caused a stir around the world. People are excited about the new possibilities and are using it with great enthusiasm. However, criminals have turned “use” into “misuse”, and scammers are already exploiting the new technology to run more malicious activities online. In this article, we will look at some examples of how AI tools are used to scam people and discuss some ways to avoid falling into such traps. Let’s get into it.

Usage of AI & ChatGPT by Cybercriminals

First, it is important to understand that AI-powered tools like ChatGPT do not create cyberthreats on their own. In fact, several professional AI chatbots are helping businesses grow to the next level. It is human cybercriminals who use, or rather misuse, AI as a tool to launch cyberattacks more efficiently and effectively. Let’s look at some ways AI can be used to create cyberthreats:

Automated Attacks: ChatGPT can write code quite efficiently, and it keeps getting better. It can be used to write scripts for automated attacks that scan for vulnerabilities and exploit them, which in turn can lead to more frequent and widespread attacks that are harder to detect and defend against.

Self-Changing Malware: Malware is usually stopped like this: once its malicious intent is discovered on an operating system (like Windows), it is flagged and patches are distributed that stop that particular malware from causing further harm. However, with the power of AI, it is now possible to create malware that changes itself ever so slightly to slip past security defenses and continue to wreak havoc. In short, AI can be used to create more sophisticated malware that is better at evading detection and spreading throughout networks.

Assistance in Social Engineering: Social engineering attacks can cause significant financial and reputational damage to individuals and organizations. Besides automating the manipulation process and identifying weaknesses in human psychology, AI can be used to create fake social media profiles or chatbots that present convincing personas or scenarios and trick users into revealing sensitive information.

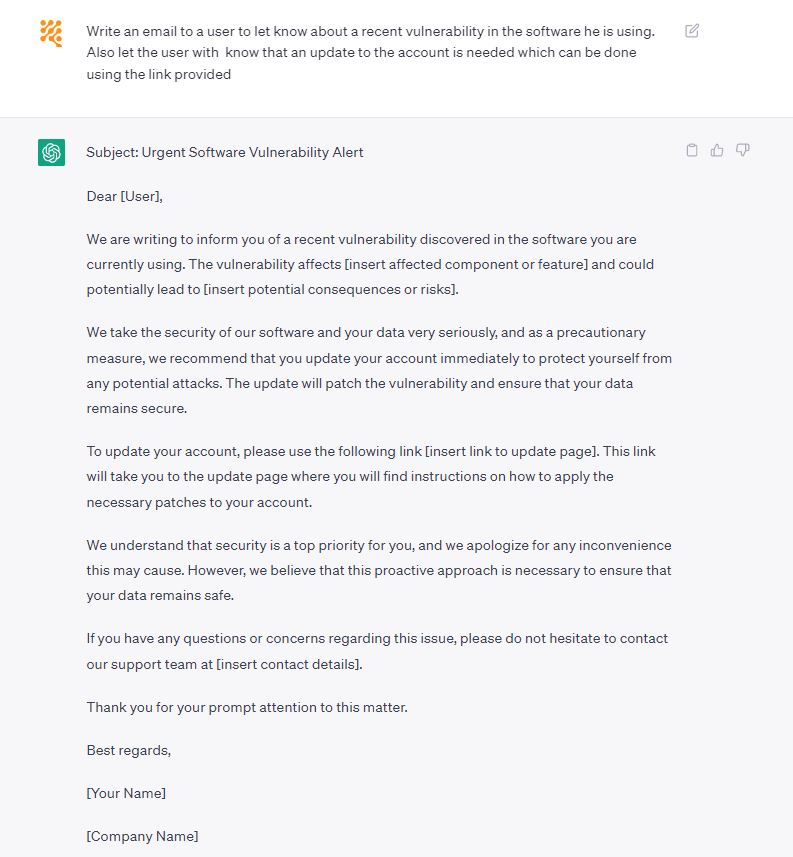

Phishing Email Creation: Even before tools like ChatGPT existed, more than 60% of cyberattacks were initiated in some way by a phishing email. Back then, almost every convincing email had to be written by a human. Now, with the power of AI, it has become almost child’s play to create highly convincing phishing emails that are more likely to trick victims into giving up sensitive information.

The accompanying image shows an example of how ChatGPT can be used to write a deceptive email. It took only seconds to write this email with AI. With just a bit of tweaking, it is ready to be used as phishing bait.

Deepfake Videos and AI-Generated Images: AI can now create deepfake videos that can be used to spread misinformation or impersonate individuals. While videos are still at an early stage, powerful tools like Midjourney make it possible to create near-perfect images that can fool even most experienced eyes. Recently, one such case caused a stir worldwide when a photographer submitted an AI-generated photo to a photography contest and actually won!

Ways to Safeguard Against AI-Generated Scams

Because AI-generated text is designed to appear authentic and convincing, AI-generated scams can often be difficult to detect. However, there are certain things you can do to protect yourself:

Avoid Unsolicited Messages: Be wary of messages or emails from people you don’t know, especially if they are offering you something that seems too good to be true.

Grow the Habit of Verifying Sources: Check the sender’s email address and verify that it is legitimate. Scammers often use an address that differs only slightly from the real one, so the habit of checking the authenticity of an email domain can save you a fortune.
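The domain check described above can also be automated. The following is a minimal Python sketch, not a complete filter: the `TRUSTED_DOMAINS` list, the `classify_sender` helper, and the 0.8 similarity threshold are all assumptions made for illustration, and real mail filters use far more signals than string similarity.

```python
from difflib import SequenceMatcher
from email.utils import parseaddr

# Hypothetical list of domains you actually deal with (an assumption).
TRUSTED_DOMAINS = {"paypal.com", "example-bank.com"}


def sender_domain(from_header):
    """Return the lowercased domain of a From header, or '' if none is found."""
    _, address = parseaddr(from_header)
    if "@" not in address:
        return ""
    return address.rsplit("@", 1)[1].lower()


def classify_sender(from_header, trusted=TRUSTED_DOMAINS, threshold=0.8):
    """Label a sender as 'trusted', 'lookalike', 'unknown', or 'invalid'."""
    domain = sender_domain(from_header)
    if not domain:
        return "invalid"
    if domain in trusted:
        return "trusted"
    # A domain that is very similar to a trusted one, but not identical,
    # is the classic sign of an impersonation attempt.
    for known in trusted:
        if SequenceMatcher(None, domain, known).ratio() >= threshold:
            return "lookalike"
    return "unknown"


if __name__ == "__main__":
    for header in ("PayPal <service@paypal.com>",
                   "PayPal <service@paypa1.com>",
                   "A Friend <someone@gmail.com>"):
        print(header, "->", classify_sender(header))
```

A "lookalike" result does not prove fraud, and an "unknown" result does not prove safety. The sketch only flags addresses that deserve a closer look.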

Check the Content Thoroughly: If you receive an email with a link or an attachment, don’t click on it without verifying the content. Hover over the link to see the actual URL, and scan the attachment with antivirus software before opening it. Be wary of any link that looks suspicious.
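Hovering over a link works because the text shown in an email and the address it really points to can differ. As a rough illustration, the Python sketch below uses only the standard library to list links whose visible text looks like a web address but points to a different host. The `mismatched_links` helper and the sample domains are hypothetical, and the check is deliberately simple (it ignores redirects and URL shorteners, for example).

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class LinkCollector(HTMLParser):
    """Collect (href, visible text) pairs for every <a href=...> element."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def mismatched_links(html_body):
    """Return links whose visible text names a different host than the href."""
    collector = LinkCollector()
    collector.feed(html_body)
    suspicious = []
    for href, text in collector.links:
        # Only compare when the visible text itself looks like an address.
        if " " in text or "." not in text:
            continue
        shown = urlparse(text if "://" in text else "//" + text).hostname
        actual = urlparse(href).hostname
        if shown and actual and shown != actual:
            suspicious.append((href, text))
    return suspicious


if __name__ == "__main__":
    sample = ('<p><a href="http://evil.example.net/login">www.mybank.com</a> '
              '<a href="https://www.mybank.com/help">Help</a></p>')
    print(mismatched_links(sample))
```

In the sample, the first link displays `www.mybank.com` but leads to `evil.example.net`, so it is reported. The second link has plain text ("Help") and is skipped.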

Use Two-Factor Authentication: Enable two-factor authentication on your accounts to prevent hackers from accessing your information even if they manage to get your password.
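To see why a stolen password alone is not enough, it helps to look at how a common second factor works. Many authenticator apps use time-based one-time passwords (TOTP, standardized in RFC 6238): the code is derived from a secret stored on your device and the current time, so it changes every 30 seconds. The Python sketch below is an illustration of that scheme with the usual defaults (SHA-1, 30-second step, 6 digits). The `totp` function name is our own, and the secret is the published RFC test key, not a real one.

```python
import base64
import hashlib
import hmac
import struct
import time


def totp(secret_b32, at=None, step=30, digits=6):
    """Compute a time-based one-time password for a base32-encoded secret."""
    key = base64.b32decode(secret_b32, casefold=True)
    now = time.time() if at is None else at
    counter = int(now // step)  # number of time steps since the Unix epoch
    digest = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low 4 bits of the last byte pick a 4-byte window.
    offset = digest[-1] & 0x0F
    value = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


if __name__ == "__main__":
    # Base32 form of the ASCII test key "12345678901234567890" from the RFC.
    demo_secret = "GEZDGNBVGY3TQOJQGEZDGNBVGY3TQOJQ"
    print("Current code:", totp(demo_secret))
```

An attacker who knows only the password cannot compute this code without the secret, and a code that is intercepted stops working shortly afterwards.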

Always Update Your Software: As we saw earlier, operating systems and software are constantly evolving to counter cyberthreats, so keep your computer and smartphone operating systems and antivirus software updated to protect against known security vulnerabilities.

Report Suspicious Activity: If you receive a suspicious message or email and can’t judge its authenticity yourself, report it to the relevant authorities or contact the company it claims to be from to verify that it is legitimate. This helps in two ways. First, you can be sure of its authenticity, and nothing stops you from going ahead if the email is legitimate. Second, if the email is actually fraudulent, you might be the first to bring it to the attention of the authorities, which could prevent thousands of future attacks.

By following these tips, you can reduce the risk of falling victim to AI-generated scams. It’s important to stay vigilant and cautious, as scammers are always coming up with new tactics to steal your personal information, and once that information is stolen, there is rarely any remedy. To guard against these threats, stay informed about emerging AI-based threats and take proactive steps to mitigate them.
